What You Didn’t Learn in Berkeley CS 188 — Part 1

Why isn’t there model-free policy iteration?

Berkeley’s CS 188 covers many important foundations of reinforcement learning. But there’s still a gap between what’s taught in that undergraduate course and the baseline expected if you’re working in the field.

Berkeley’s course covers, in no particular order:

Basic search algorithms

Constraint satisfaction problems (CSPs)

Minimax (with alpha-beta pruning)

Bayes nets

Markov Decision Process (MDP) definition

Policy iteration

Q-learning (and some variations)

This material is foundational, but the way it’s taught often feels fragmented. My goal here is to reorganize the basics into a clearer ontology that naturally sets up modern, continuous-control methods. The information hierarchy, I’d argue, could be sharper than what’s presented in CS 188.

This post is the first part in a series on reinforcement learning. Later posts will cover continuous control, off-policy methods, and RL for post-training.

CS 188 Recap: Markov Decision Process Definition

If you already remember the basics from 188, you can skip this section. Reinforcement learning is typically formalized as a Markov Decision Process (MDP). The MDP specifies the environment:

States S: possible configurations of the world.

Actions A: moves the agent can take.

Transitions P(s’ | s, a): probability of landing in s’ after taking action a in s.

Rewards R(s, a, s’): immediate payoff for (s, a) → s’.

Discount γ ∈ [0, 1): how much you value the future.

Those five define the problem itself. On the agent side, we define constructs that depend on the MDP:

Policy π: a mapping from states to actions.

Value function Vπ(s): expected discounted return from state s under π.

Q-function Qπ(s, a): expected return from (s, a) under π. If no π is present, then Q refers to the Q-values estimates that we have established so far.

A Clearer Ontology

CS 188 distinguishes “model-based” vs. “model-free” methods:

Model-based: assumes access to the transition probabilities and rewards (P and R).

Model-free: learns from sampled experience without ever observing P or R directly.

Another useful axis is “policy-based” vs. “value-based”:

Policy-based: directly solve for the optimal policy, then improve the value estimates by following that policy, repeating until convergence. (Many methods also use value estimates as baselines or for other purposes, e.g. actor-critic.)

Value-based: solve V or Q directly until convergence, using the greedy policy that maximizes the expected value of the next state:

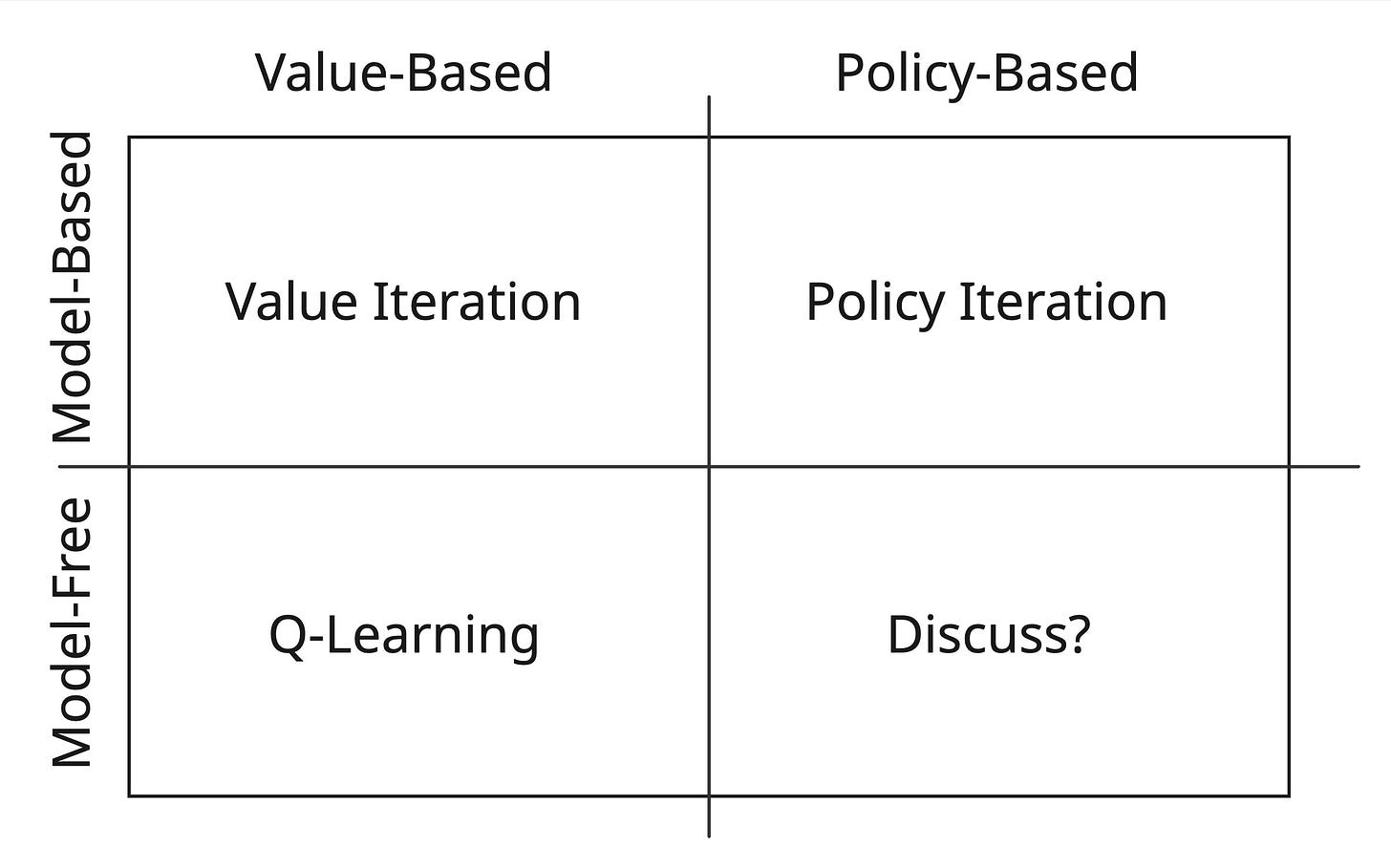

So we have two orthogonal axes: model-based vs. model-free, and value-based vs. policy-based. Together they give us a 2-by-2 view of classical RL methods:

This is the ontology I propose. The last quadrant, model-free policy iteration, is the most interesting, and we’ll work our way toward it.

Value Iteration

Value iteration iteratively updates the value function until convergence. When you know P and R, the “Bellman optimality update” is:

In other words, the value of a state is the maximum expected reward + discounted value of the next state. It’s implicitly summing an infinite discounted series. If V* is the fixed point of the update operator above, then:

Bellman Operator and Contraction

It makes sense that if we can solve for the fixed point V*, then we can define the optimal policy by greedily following whichever action maximizes the expected value of the next state. But how do we prove that the iterative process above actually converges?

To do so, we define the “Bellman optimality operator” (T) - the new value function if you perform a single iteration above:

Then for any two value functions V and W:

And since we didn’t specify which s:

where that infinity operator refers to the maximum distance between any two states. In other words, when you apply the Bellman update operator to any two value functions, the resulting value functions are closer together.

That matters because you can now show that T must converge to a single fixed point. The proof is simple. Assume that there are two possible fixed points. Then the update above moves them closer together, meaning that there cannot be any non-zero distance between the points. To show existence, we need to know that if you keep getting closer and closer to some limit, then that limit is still a valid value function. For finite state spaces this is obvious because value functions are just real vectors, and in Rn every Cauchy sequence converges. This is called the “Banach fixed point theorem”.

Iterative Expansion

Notice that after one application:

where a1 is the action recommended by the greedy policy given V. After two iterations:

Each step adds one more discounted term. Early actions can be wrong, but their contribution shrinks geometrically. Eventually you converge to V*. In practice, you can truncate after k terms.

Policy Iteration

Now the policy-based, model-based quadrant. Unlike value iteration, here we separate policy evaluation from policy improvement:

1. Policy evaluation: solve

Unlike value iteration, this is a linear system of equations that you can invert directly, since π is known.

2. Policy improvement: set

And repeat until convergence.

Proof of Convergence

The first step is showing that V(s) can only increase for any given s.

Define Tπ as the “Bellman expectation operator,” which updates a state-value function V in the “direction of” π:

(Note that V might not have been generated by following π.) By definition, Vπ = Tπ Vπ. Notice that if you apply Tπ’ enough times to any value function V, you’ll end up with Vπ’. If π’ is greedy w.r.t. Vπ, then

since after enough iterations of Tπ’, the policy becomes π’. The first inequality holds because we are using the same state-values, and only deviating if the new action leads to a strictly higher state-value. The second inequality is more subtle, but it relies on the monotonicity of the Bellman update operator. If you have two value functions where V(s) > U(s), then TπV(s) > TπU(s). This result comes directly from the Bellman operator definition if you compare each term of the expression. We’re just applying this property to the last two terms of the inequality to produce the next term, infinitely many times.

So each greedy improvement can only increase value. Since there are finitely many deterministic policies (|A||S|), this process must terminate eventually.

Model-Free Value Iteration (Q-Learning)

In the real world, we might not know P and R. We just have to start taking actions and figuring it out empirically. This leads us to the “model-free” methods. We can’t directly compute the fixed point from before, because it relies on knowing P and the true reward function R:

So instead we define a new fixed point, which relies on a value function for each state-action pair:

In other words, the value of a state-action pair is the expected reward, plus the discounted value of the next state-action pair you’d find yourself in.

Naively you might try approximating this by gathering observations:

But that doesn’t quite work. r is noisy - it varies based on which s’ you land in. You need an averaging scheme. The natural instinct might be to use a sample mean:

This works, but exploration is non-stationary. Q itself is changing, so early samples are inaccurate. Instead, we use an exponential moving average (EMA):

Iterate, slowly lower alpha, and the process converges. Each update adds another discounted term, just like value iteration.

Proof of Convergence

This is trickier than proving convergence for the model-based methods, because now it’s stochastic. The standard proof defines each update as:

where M is zero-mean noise. Ignoring noise, this looks like:

Then they define a continuous version of Q called q, which is respect to a new variable tau:

In the limit as alpha goes to 0,

This ODE is used to demonstrate convergence. The full analysis is beyond the scope of this post.

Model-Free Policy Iteration

Now let’s try to apply the same methodology to policy iteration, just as Q-learning stochastically approximated value iteration.

In principle, we could evaluate Qπ by sampling, then improve π, and repeat. But exact evaluation by sampling is very slow and requires waiting for convergence each round. Worse, unlike the model-based case, you can’t invert the system of equations for Vπ. So the advantages of policy iteration vanish in the model-free setting.

A practical compromise is approximate policy iteration: run only k evaluation steps before improving the policy. This weakens convergence guarantees. With k=1, you get a popular method called SARSA.

SARSA’s policy is to follow Q most of the time, except for epsilon probability where you take a random action, called an “epsilon-greedy policy”:

We update with:

This is called “TD(0)”, or temporal-difference learning with lambda equals 0. Temporal-difference learning allows us to extend further. Instead of just one-step lookahead, you can mix multiple n-step returns Gn:

In practice, computing this infinite sum is approximated dynamically. Lots of simple algebra goes into deriving the recursion (eligibility traces), but I’ll skip it here.

Wrap-Up

This is why you don’t really see a neat “model-free policy iteration.” Without P and R, exact evaluation is gone, and requiring near-converged sample evaluation before every improvement is prohibitively inefficient.

Is that all we need to know for reinforcement learning? Unfortunately the methods above don’t work in many domains. They require not “too big” of a state-action table, and there’s no way to handle continuous action spaces. I’ll cover how you can solve these more complex scenarios in my next post.

For a comprehensive overview that overlaps CS 188 material and this post, see Lilian Weng’s excellent writeup.